A CORD POD needs to be connected to an upstream network to provide connectivity from within CORD to the outside world. The vRouter service in CORD is designed to enable the CORD POD to communicate with upstream routers and provide this connectivity. Currently the vRouter supports OSPF and BGP for communicating routes to and from upstream routers.

Each deployment is different in terms of protocols and features required, so this guide aims to be a general overview of how to set up CORD to communicate with external routers. The operator will have to customize the configurations to be appropriate for their deployment.

Deploying the vRouter infrastructure is a relatively manual process right now. Automating this process was not a target for the first release, but over time the process will become more automated and more in line with the rest of the CORD deployment.

Prerequisites

This guide assumes that you have run through the POD install procedure outlined here. You must also have installed the fabric ONOS cluster and connected the fabric switches to that controller.

Physical Connectivity

External routers must be physically connected to one of the fabric leaf switches. It is possible to connect to multiple routers, but currently there is a limitation that they must all be physically connected to the same leaf switch.

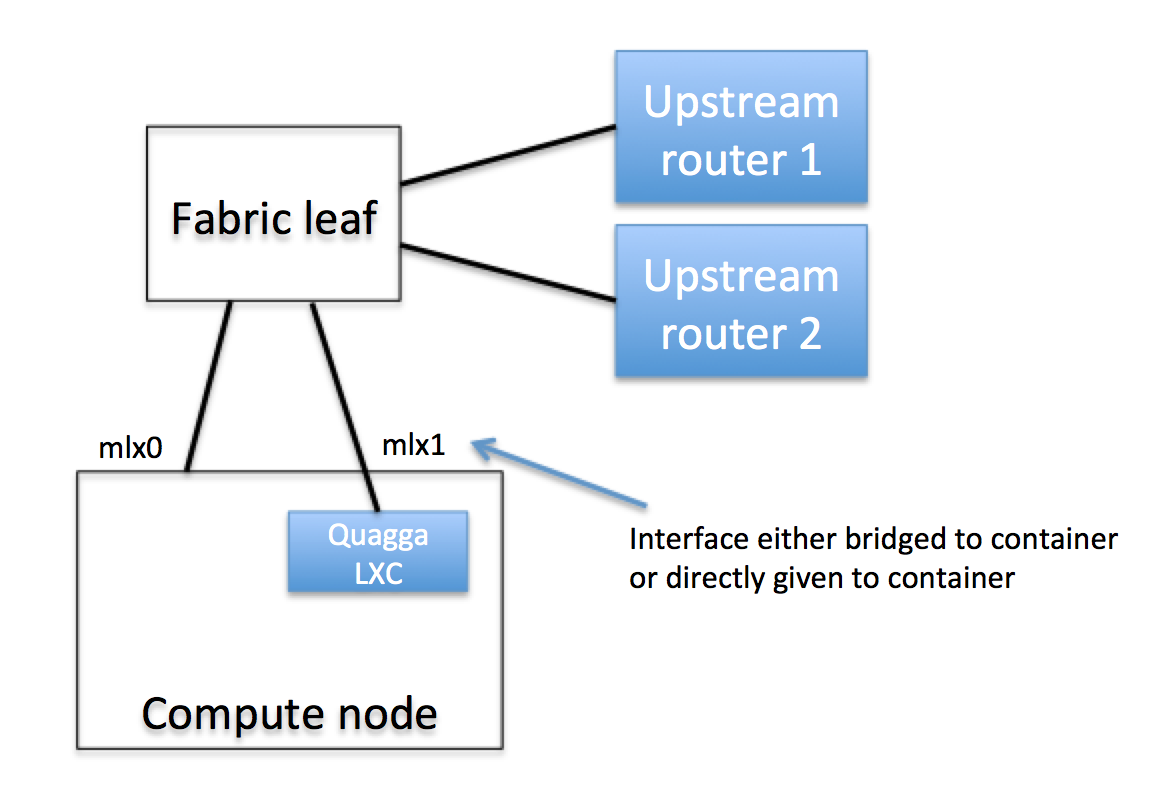

Figure 1: Physical connectivity of the upstream routers and internal Quagga host

Dedicating an interface connected to the fabric

The CORD automation determines which NICs on each compute node are connected to the fabric and puts these NICs into a bonded interface. The name of this bond is fabric, so if you run ifconfig on the compute node where you plan to deploy Quagga, you should see this bonded interface in the output.

ubuntu@fumbling-reason:~$ ifconfig fabric

fabric Link encap:Ethernet HWaddr 00:02:c9:1e:b4:e0

inet addr:10.6.1.2 Bcast:10.6.1.255 Mask:255.255.255.0

inet6 addr: fe80::202:c9ff:fe1e:b4e0/64 Scope:Link

UP BROADCAST RUNNING MASTER MULTICAST MTU:1500 Metric:1

RX packets:1048256 errors:0 dropped:42 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:89101760 (89.1 MB) TX bytes:0 (0.0 B)

We need to dedicate one of these fabric interfaces to the Quagga container, so we'll need to remove it from the bond. You should first identify the name of the interface that you want to dedicate. In this example we'll assume it is called mlx1. You can then remove it from the bond by editing the /etc/network/interfaces file:

sudo vi /etc/network/interfaces

You should see a stanza that looks like this:

auto mlx1

iface mlx1 inet manual

bond-master fabric

Simply remove the line bond-master fabric, save the file, and then restart the networking service or reboot the compute node.
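The manual edit above can also be scripted. The following is a minimal sketch, assuming the simple stanza layout shown above (stanzas introduced by auto, allow-* or iface lines); the function name release_from_bond and the sample text are illustrative and not part of CORD. It drops the bond-master line only inside the stanza of the named interface and leaves every other stanza untouched.

```python
def release_from_bond(interfaces_text, ifname, bond="fabric"):
    """Return interfaces_text with 'bond-master <bond>' removed from ifname's stanza."""
    out = []
    in_stanza = False
    for line in interfaces_text.splitlines():
        words = line.split()
        # Assumption: a stanza starts at any 'auto', 'allow-*' or 'iface' line.
        if words and (words[0] in ("auto", "iface") or words[0].startswith("allow-")):
            in_stanza = len(words) > 1 and words[1] == ifname
        if in_stanza and words == ["bond-master", bond]:
            continue  # drop the bonding line for the dedicated interface only
        out.append(line)
    return "\n".join(out) + "\n"


# Hypothetical file content with two bonded NICs.
SAMPLE = """\
auto mlx0
iface mlx0 inet manual
    bond-master fabric

auto mlx1
iface mlx1 inet manual
    bond-master fabric
"""

if __name__ == "__main__":
    print(release_from_bond(SAMPLE, "mlx1"), end="")
```

Writing the result back to /etc/network/interfaces still requires root privileges, and the networking service must be restarted afterwards as described above.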

Install Quagga

vRouter makes use of the Quagga routing protocol stacks for communicating with upstream routers. The correct version of Quagga must be deployed, connected to the physical network, and configured to peer with the upstream.

Provision a container

Quagga needs to be run in a container on one of the compute nodes in the CORD system. Eventually this will be deployed as a service in the same way as other services, but in the meantime this container must be provisioned manually. Quagga should run inside a container because it programs the kernel routing table, and those changes should be isolated from the host the container runs on. The container needs to have a direct physical connection to a fabric leaf switch (see Figure 1). This must be the same fabric leaf switch that the upstream routers are connected to. The container also needs to be able to access the management network so that it can communicate with the ONOS cluster.

The container can run on any of the nodes in the system that has a spare physical interface to support this connectivity. It's recommended to use LXC to provision a container for this purpose, and connect the physical interface directly into the container.

Install Quagga

We require a patched version of Quagga that allows Quagga to connect to the ONOS cluster, which means we need to install Quagga from source.

Quagga source can be found on their downloads page. The recommended version is 0.99.23 (quagga-0.99.23.tar.gz).

The FPM patch can be found here: fpm-remote.diff

Download the Quagga source and FPM patch into the LXC container.

Then, we can extract the source, apply the patch and build Quagga:

$ tar -xzvf quagga-0.99.23.tar.gz

$ cd quagga-0.99.23

$ patch -p1 < ../fpm-remote.diff

$ ./configure --enable-fpm

$ make

$ sudo make install

Now Quagga should be built and installed in the container.

Install and Configure vRouter

You should already have an ONOS cluster with the segment routing application running that will be controlling the physical hardware switches. On this cluster we need to configure and run vRouter.

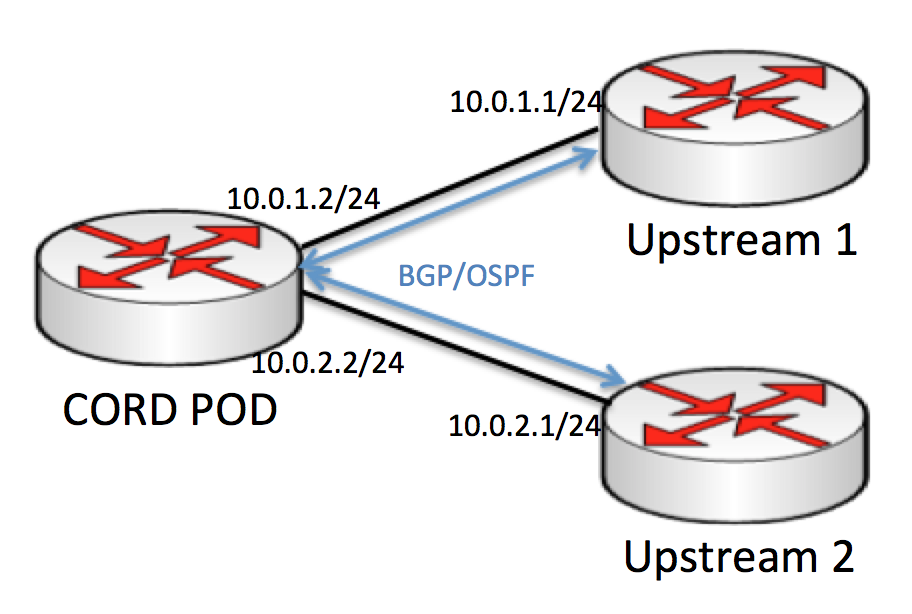

It is helpful to think of the vRouter as abstracting the entire CORD POD as a single router. From the point of view of the outside routers, they are simply talking to another router when they communicate with the CORD POD.

For example, if we are communicating with two upstream routers, then a logical view of what we are trying to set up would look like Figure 2:

Figure 2: Logical view of a CORD POD communicating with two upstream routers

In reality, the physical implementation of that looks more like Figure 3:

Figure 3: Physical view of the CORD POD peering with two upstream routers

The control plane and the data plane of the logical CORD POD router have been separated out. The control plane is running as an instance of Quagga running in a container attached to the data plane, and the data plane is implemented by an ONOS-controlled OpenFlow switch. (Your POD may have other fabric switches that are not shown here).

Interface Configuration

Each interface on the leaf switch that connects to an upstream router needs one or more IP addresses that it will use to communicate with that router. Each IP address needs to be configured in an interface configuration section in ONOS. For example, if you have two upstream routers and are using the IP addresses 10.0.1.2 and 10.0.2.2 to talk with them, you would apply the following configuration to the appropriate ports.

Note that the interface configuration is applied to the ports that the upstream routers are connected to (see Figure 3). We configure interfaces on these ports because ONOS is going to make it appear as though there is an IP address configured on this port of the switch (e.g. by responding to ARP requests with the configured MAC address). Again, this switch will look like a router to the external routers.

There is no interface configuration required on the port that the Quagga instance connects to.

{

"ports" : {

"of:0000000000000001/1" : {

"interfaces" : [

{

"name" : "upstream1",

"ips" : [ "10.0.1.2/24" ],

"mac" : "00:00:00:00:00:01"

}

]

},

"of:0000000000000001/2" : {

"interfaces" : [

{

"name" : "upstream2",

"ips" : [ "10.0.2.2/24" ],

"mac" : "00:00:00:00:00:01"

}

]

}

}

}

- name - an arbitrary name string for the interface

- ips - a list of IP addresses configured on the interface. Configure the IP address that is being used to communicate with the upstream router that is connected to that port.

- mac - a MAC address configured on the interface. This MAC address must be the same as the MAC address on the dataplane interface of the Quagga host.
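The constraints in the list above are easy to get wrong when editing JSON by hand. The sketch below checks them, assuming the configuration has the shape shown in the example; the function name check_interfaces and the Quagga MAC value passed to it are illustrative. It reports any interface whose MAC differs from the Quagga dataplane MAC and any entry in ips that does not parse as an address.

```python
import ipaddress
import json

# The example interface configuration from this guide.
PORTS_CONFIG = json.loads("""
{
  "ports": {
    "of:0000000000000001/1": {
      "interfaces": [
        {"name": "upstream1", "ips": ["10.0.1.2/24"], "mac": "00:00:00:00:00:01"}
      ]
    },
    "of:0000000000000001/2": {
      "interfaces": [
        {"name": "upstream2", "ips": ["10.0.2.2/24"], "mac": "00:00:00:00:00:01"}
      ]
    }
  }
}
""")


def check_interfaces(config, quagga_mac):
    """Return a list of problems found in the 'ports' section (empty if none)."""
    problems = []
    for port, port_cfg in config["ports"].items():
        for intf in port_cfg["interfaces"]:
            # Every interface must carry the MAC of the Quagga dataplane interface.
            if intf["mac"].lower() != quagga_mac.lower():
                problems.append(f"{port} {intf['name']}: MAC {intf['mac']} != {quagga_mac}")
            for ip in intf["ips"]:
                try:
                    ipaddress.ip_interface(ip)  # parses 'address/prefix' strings
                except ValueError:
                    problems.append(f"{port} {intf['name']}: bad IP {ip!r}")
    return problems


if __name__ == "__main__":
    # Assumption: 00:00:00:00:00:01 is the MAC of the Quagga host's dataplane interface.
    print(check_interfaces(PORTS_CONFIG, "00:00:00:00:00:01") or "interface configuration looks consistent")
```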

vRouter Configuration

Then, the vRouter app itself needs to be configured.

{

"apps" : {

"org.onosproject.router" : {

"router" : {

"controlPlaneConnectPoint" : "of:0000000000000001/5",

"ospfEnabled" : "true",

"interfaces" : [ "upstream1", "upstream2" ]

}

}

}

}

- controlPlaneConnectPoint - this is the port on the fabric leaf switch where the Quagga instance (in the container we deployed earlier) is connected.

- ospfEnabled - this should be set to true if you are running OSPF. If not, it can be set to false or omitted.

- interfaces - this is a list of the vRouter interfaces, which should be the interfaces that connect to the upstream routers. The names in the list reference the names of the interfaces in the previous configuration.
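Because the interfaces list refers to interface names by string, a typo only shows up at runtime. As a minimal sketch, assuming both configuration fragments are available as Python dictionaries, the check below confirms that every name listed in the vRouter configuration is defined in the interface configuration. The function name check_vrouter and the trimmed sample data are illustrative.

```python
# Trimmed copies of the two example fragments from this guide.
PORTS = {
    "of:0000000000000001/1": {"interfaces": [{"name": "upstream1"}]},
    "of:0000000000000001/2": {"interfaces": [{"name": "upstream2"}]},
}
ROUTER = {
    "controlPlaneConnectPoint": "of:0000000000000001/5",
    "ospfEnabled": "true",
    "interfaces": ["upstream1", "upstream2"],
}


def check_vrouter(ports, router):
    """Return a list of vRouter interface names that have no interface definition."""
    defined = {
        intf["name"]
        for port_cfg in ports.values()
        for intf in port_cfg["interfaces"]
    }
    return [
        f"interface {name!r} is not defined under 'ports'"
        for name in router["interfaces"]
        if name not in defined
    ]


if __name__ == "__main__":
    print(check_vrouter(PORTS, ROUTER) or "all vRouter interfaces are defined")
```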

Segment Routing Configuration

Finally, there is some extra configuration for the segment routing app that allows it to work properly in conjunction with the vRouter:

{

"apps" : {

"org.onosproject.segmentrouting" : {

"segmentrouting" : {

"vRouterMacs" : [

"a4:23:05:34:56:78"

],

"vRouterId" : "of:0000000000000001",

"suppressSubnet" : [

"of:0000000000000001/1", "of:0000000000000001/2", "of:0000000000000001/5"

],

"suppressHostByProvider" : [

"org.onosproject.provider.host"

],

"suppressHostByPort" : [

"of:0000000000000001/1", "of:0000000000000001/2", "of:0000000000000001/5"

]

}

}

}

}

- vRouterMacs - this is a fake MAC address that ONOS will use to answer to internal hosts that need to reach the internet. This can be anything, but it needs to match the configuration of the VTN application.

- vRouterId - the device ID of the fabric leaf switch that vRouter is programming

- suppressSubnet - informs segment routing not to consider these ports as an internal subnet. This should contain all ports connected to upstream routers as well as the port where the Quagga host is connected.

- suppressHostByProvider - segment routing won't react to host events from this provider. In CORD all hosts are configured rather than learnt dynamically.

- suppressHostByPort - segment routing won't react to host events from these ports. This configuration allows us to ignore a host according to its attach point. However, if suppressHostByProvider is configured, hosts learnt from all ports will be ignored and thus suppressHostByPort will become redundant.
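The port lists in this fragment follow directly from the two earlier fragments: the ports with upstream interfaces, plus the port where Quagga is attached. The sketch below derives them rather than typing them by hand. It assumes the vRouter device is the switch that hosts the Quagga port, which holds here because all upstream routers and the Quagga host share one leaf switch; the function names are illustrative.

```python
import json

# Trimmed copies of the interface and vRouter fragments from this guide.
PORTS = {
    "of:0000000000000001/1": {"interfaces": [{"name": "upstream1"}]},
    "of:0000000000000001/2": {"interfaces": [{"name": "upstream2"}]},
}
ROUTER = {
    "controlPlaneConnectPoint": "of:0000000000000001/5",
    "interfaces": ["upstream1", "upstream2"],
}


def suppressed_ports(ports, router):
    """Upstream router ports plus the Quagga port, sorted for stable output."""
    upstream_names = set(router["interfaces"])
    result = {router["controlPlaneConnectPoint"]}
    for connect_point, port_cfg in ports.items():
        if any(i["name"] in upstream_names for i in port_cfg["interfaces"]):
            result.add(connect_point)
    return sorted(result)


def segment_routing_fragment(ports, router, vrouter_mac):
    """Build the 'segmentrouting' section from the other two fragments."""
    suppressed = suppressed_ports(ports, router)
    return {
        "vRouterMacs": [vrouter_mac],
        # Assumption: the vRouter device is the switch hosting the Quagga port.
        "vRouterId": router["controlPlaneConnectPoint"].rsplit("/", 1)[0],
        "suppressSubnet": suppressed,
        "suppressHostByProvider": ["org.onosproject.provider.host"],
        "suppressHostByPort": suppressed,
    }


if __name__ == "__main__":
    fragment = segment_routing_fragment(PORTS, ROUTER, "a4:23:05:34:56:78")
    print(json.dumps(fragment, indent=2))
```

The vRouter MAC still has to be chosen by the operator and must match the VTN configuration, so it is passed in rather than derived.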

Run the Applications

If segment routing was already running, it may be necessary to deactivate and reactivate it to ensure it reads the new configuration.

onos> app deactivate org.onosproject.segmentrouting

onos> app activate org.onosproject.segmentrouting

The vRouter application should be activated as well.

onos> app activate org.onosproject.vrouter

Configure Quagga

At this point if ONOS is set up correctly, the internal Quagga host should be able to talk with the external upstream routers. You can verify this by pinging from the Quagga container to the upstream routers.

If that is successful, the final step is to configure Quagga to communicate with upstream routers, and also to send routes to ONOS. This configuration of Quagga is going to be highly dependent on the configuration of the upstream network, so it won't be possible to give comprehensive configuration examples here. I recommend checking out the Quagga documentation for exhaustive information on Quagga's capabilities and configuration. Here I will attempt to provide a few basic examples of Quagga configuration to get you started. You'll have to enhance these with the features and functions that are needed in your network.

Zebra Configuration

Regardless of which routing protocols you are using in your network, it is important to configure Zebra's FPM connection to send routes to the vRouter app running on ONOS. This feature was enabled by the patch that was applied earlier when we installed Quagga.

A minimal Zebra configuration might look like this:

!

hostname cord-zebra

password cord

!

fpm connection ip 10.6.0.5 port 2620

!

end

The FPM connection IP address is the IP address of one of the ONOS instances that are running the vRouter app.

Of course, if you have other configuration that needs to go in zebra.conf, you should add that here as well.
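Since the only deployment-specific value in the minimal Zebra configuration is the FPM target, the file can be generated. The sketch below renders the same configuration from an ONOS address and port; the function name render_zebra_conf is illustrative, and the defaults are simply the values used in the example above.

```python
import ipaddress


def render_zebra_conf(onos_ip, fpm_port=2620, hostname="cord-zebra", password="cord"):
    """Render the minimal Zebra configuration with an FPM connection to ONOS."""
    ipaddress.ip_address(onos_ip)  # raises ValueError on a malformed address
    lines = [
        "!",
        f"hostname {hostname}",
        f"password {password}",
        "!",
        # This statement is provided by the FPM patch applied when building Quagga.
        f"fpm connection ip {onos_ip} port {fpm_port}",
        "!",
        "end",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_zebra_conf("10.6.0.5"), end="")
```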

bgpd Configuration

An example simple BGP configuration for peering with two BGP peers might look like this:

hostname bgp

password cord

!

router bgp 65535

bgp router-id 10.0.1.2

!

network 192.168.0.0/16

!

neighbor 10.0.1.1 remote-as 65535

neighbor 10.0.1.1 description upstream1

!

neighbor 10.0.2.1 remote-as 65535

neighbor 10.0.2.1 description upstream2

!
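In this example each BGP neighbor sits on the same subnet as one of the interface addresses configured in ONOS (10.0.1.1 with 10.0.1.2/24, 10.0.2.1 with 10.0.2.2/24). The sketch below checks that relationship, assuming directly connected peers as in this guide; deployments using multihop peering would not satisfy it. The function name connected_interface is illustrative.

```python
import ipaddress

INTERFACE_IPS = ["10.0.1.2/24", "10.0.2.2/24"]  # from the ONOS interface configuration
BGP_NEIGHBORS = ["10.0.1.1", "10.0.2.1"]        # from the bgpd configuration


def connected_interface(neighbor, interface_ips):
    """Return the interface IP whose subnet contains neighbor, or None."""
    addr = ipaddress.ip_address(neighbor)
    for ip in interface_ips:
        iface = ipaddress.ip_interface(ip)
        # The neighbor must be on the subnet and must not be our own address.
        if addr in iface.network and addr != iface.ip:
            return ip
    return None


if __name__ == "__main__":
    for neighbor in BGP_NEIGHBORS:
        match = connected_interface(neighbor, INTERFACE_IPS)
        print(neighbor, "->", match or "no matching interface")
```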

ospfd Configuration

A simple OSPF configuration might look like this:

hostname ospf

password cord

!

interface eth1

!

router ospf

ospf router-id 10.0.1.2

!

network 10.0.1.2/24 area 0.0.0.0

network 10.0.2.2/24 area 0.0.0.0

!

Start Quagga

The Quagga daemons must be started manually for now. By default they will start as user 'quagga', so make sure that user exists and can read and write the config files. Substitute in the correct paths to the daemons and config files if they are different.

/usr/local/sbin/zebra -f ~/zebra.conf -z /tmp/z.sock -i /tmp/z.pid -d

/usr/local/sbin/bgpd -f ~/bgpd.conf -z /tmp/z.sock -i /tmp/b.pid -d

/usr/local/sbin/ospfd -f ~/ospfd.conf -z /tmp/z.sock -i /tmp/o.pid -d
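If your paths differ, it can help to generate the three command lines from one place so that the shared Zebra socket stays consistent. The sketch below builds argument lists using only the flags shown above; the function name daemon_commands and its parameters are illustrative, and it only constructs the commands without running them.

```python
import os


def daemon_commands(sbin="/usr/local/sbin", conf_dir="~", run_dir="/tmp",
                    daemons=("zebra", "bgpd", "ospfd")):
    """Return one argv list per daemon, in the same order as the commands above."""
    conf_dir = os.path.expanduser(conf_dir)
    socket_path = os.path.join(run_dir, "z.sock")  # all daemons share Zebra's socket
    commands = []
    for name in daemons:
        commands.append([
            os.path.join(sbin, name),
            "-f", os.path.join(conf_dir, f"{name}.conf"),
            "-z", socket_path,
            "-i", os.path.join(run_dir, f"{name[0]}.pid"),  # z.pid, b.pid, o.pid
            "-d",
        ])
    return commands


if __name__ == "__main__":
    for argv in daemon_commands():
        print(" ".join(argv))
```

Each list can be handed to subprocess.run once you are satisfied with the paths.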

Quagga should now be able to communicate with the upstream routers, and should be able to learn routes and send them to ONOS. ONOS will program them in the data plane, and the internal hosts should then be able to reach the internet.

Configure the XOS vRouter Service

XOS must be configured to use the same public IP addresses that are specified in the bgpd configuration. In the example above, this address block is 192.168.0.0/16.

To configure XOS, edit the file cord-services.yaml in service-profile/cord-pod. Find the address_pool definitions. In the default XOS cord-pod configuration, these are called addresses_vsg and addresses_exampleservice. The addresses property of each address pool must lie within the subnet specified in the bgpd configuration, and the pools must not overlap. It's alright to subdivide the bgpd address block into smaller non-overlapping subnets. For example, you could split 192.168.0.0/16 into 192.168.0.0/17 and 192.168.128.0/17.
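The containment and non-overlap rules can be checked mechanically before editing cord-services.yaml. The sketch below uses Python's standard ipaddress module and assumes each pool's addresses property is written as a single CIDR block; the function name check_pools is illustrative. Halving 192.168.0.0/16 yields two /17 blocks, which the example uses as the two pools.

```python
import ipaddress


def check_pools(bgp_block, pools):
    """Return problems: pools outside the BGP block or overlapping each other."""
    block = ipaddress.ip_network(bgp_block)
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in pools.items()}
    problems = []
    for name, net in nets.items():
        if not net.subnet_of(block):
            problems.append(f"{name} ({net}) is outside {block}")
    names = sorted(nets)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if nets[first].overlaps(nets[second]):
                problems.append(f"{first} ({nets[first]}) overlaps {second} ({nets[second]})")
    return problems


if __name__ == "__main__":
    block = "192.168.0.0/16"
    # Split the announced block into two equal halves.
    halves = [str(n) for n in ipaddress.ip_network(block).subnets(prefixlen_diff=1)]
    pools = {"addresses_vsg": halves[0], "addresses_exampleservice": halves[1]}
    print(pools)
    print(check_pools(block, pools) or "pools are valid")
```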