We can set up different environments for development work. So far there are two choices: CORD-in-a-Box (CiaB), or a single VM for local development.

Choice 1: Set Up a CiaB-Based Development Environment

Download and run the cord-in-a-box.sh script on the target server. The script's output is displayed and also saved to ~/cord/install.out:

$ curl -o ~/cord-in-a-box.sh https://raw.githubusercontent.com/opencord/cord/cord-3.0/scripts/cord-in-a-box.sh

$ bash ~/cord-in-a-box.sh -p mcord

The script takes a long time (at least two hours) to run. Be patient! If it hasn't completely failed yet, then assume all is well!
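Since the script writes its output to ~/cord/install.out, progress can be checked from a second terminal while the install runs. The following is a minimal sketch; the function name `install_log_tail` is hypothetical and not part of CORD, and only the default log path is taken from the text above.

```shell
# Hypothetical helper (not part of CORD): print the last N lines of the
# CiaB install log. The default path is the one named in this guide.
install_log_tail() {
    log_file="${1:-$HOME/cord/install.out}"
    lines="${2:-20}"
    if [ ! -f "$log_file" ]; then
        # The log does not exist until the script has started writing to it.
        echo "log not found: $log_file" >&2
        return 1
    fi
    tail -n "$lines" "$log_file"
}
```

Calling `install_log_tail` with no arguments prints the last 20 lines of the default log; to follow the log continuously instead, `tail -f ~/cord/install.out` does the same job.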

Development Workflow on CiaB

CORD-in-a-Box is a useful environment for integration testing and debugging. A typical scenario is to find a problem, and then rebuild and redeploy some XOS containers (e.g., a service synchronizer) to verify a fix. A workflow for quickly rebuilding and redeploying the XOS containers from source is:

Make changes in your source tree, under:

~/cord/orchestration/xos*

Log in to the corddev VM and change to the build directory:

$ ssh corddev

$ cd /cord/build

First, if you made any changes to a profile (e.g., you added a new service), you'll need to re-sync the configuration from the build node to the head node. To do this, run:

$ ./gradlew -PdeployConfig=config/mcord_in_a_box.yml PIprepPlatform

Then re-build and re-publish new images:

$ ./gradlew -PdeployConfig=config/mcord_in_a_box.yml :platform-install:buildImages

$ ./gradlew -PdeployConfig=config/mcord_in_a_box.yml :platform-install:publish

$ ./gradlew -PdeployConfig=config/mcord_in_a_box.yml :orchestration:xos:publish

Now the new XOS images should be published to the registry on prod. To bring them up, log in to the prod VM and define these aliases:

CORD_PROFILE=$( cat /opt/cord_profile/profile_name )

alias xos-pull="docker-compose -p $CORD_PROFILE -f /opt/cord_profile/docker-compose.yml pull"

alias xos-up="docker-compose -p $CORD_PROFILE -f /opt/cord_profile/docker-compose.yml up -d --remove-orphans"

alias xos-teardown="pushd /opt/cord/build/platform-install; ansible-playbook -i inventory/head-localhost --extra-vars @/opt/cord/build/genconfig/config.yml teardown-playbook.yml; popd"

alias xos-launch="pushd /opt/cord/build/platform-install; ansible-playbook -i inventory/head-localhost --extra-vars @/opt/cord/build/genconfig/config.yml launch-xos-playbook.yml; popd"

alias compute-node-refresh="pushd /opt/cord/build/platform-install; ansible-playbook -i /etc/maas/ansible/pod-inventory --extra-vars=@/opt/cord/build/genconfig/config.yml compute-node-refresh-playbook.yml; popd"
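Note that the double-quoted alias definitions expand $CORD_PROFILE once, at the moment the alias is defined, and that bash does not expand aliases in non-interactive scripts by default. For scripted use, a function that reads the profile name at call time may be more convenient. The sketch below only prints the docker-compose command line rather than running it; `xos_compose_cmd` and the `CORD_PROFILE_FILE` override are hypothetical names introduced for illustration, not part of CORD.

```shell
# Hypothetical helper (not part of CORD): print the docker-compose command
# line that the xos-pull / xos-up aliases would run, reading the profile
# name at call time instead of at definition time.
# CORD_PROFILE_FILE is an illustrative override for the profile-name file.
xos_compose_cmd() {
    profile_file="${CORD_PROFILE_FILE:-/opt/cord_profile/profile_name}"
    profile="$(cat "$profile_file")" || return 1
    # "$*" carries the docker-compose subcommand and flags, e.g. "pull".
    echo "docker-compose -p $profile -f /opt/cord_profile/docker-compose.yml $*"
}
```

For example, `xos_compose_cmd up -d --remove-orphans` prints the command behind xos-up, which can be inspected before it is executed.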

To pull the new images from the registry and launch the containers, while retaining the existing XOS database, run:

$ xos-pull; xos-up

Alternatively, to remove the XOS database and reinitialize XOS from scratch, run:

$ xos-teardown; xos-pull; xos-launch; compute-node-refresh
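The two redeploy paths differ only in whether the XOS database survives. As a small memory aid, the sketch below maps a mode name to the alias sequence given above. The function name `xos_redeploy_plan` and the mode names `keep` and `reset` are hypothetical; the function prints the sequence and does not run it.

```shell
# Hypothetical helper (not part of CORD): print the alias sequence for a
# redeploy mode. "keep" retains the existing XOS database; "reset" removes
# it and reinitializes XOS from scratch.
xos_redeploy_plan() {
    case "$1" in
        keep)  echo "xos-pull; xos-up" ;;
        reset) echo "xos-teardown; xos-pull; xos-launch; compute-node-refresh" ;;
        *)     echo "usage: xos_redeploy_plan keep|reset" >&2; return 2 ;;
    esac
}
```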

Adding Tenants and Instances in TOSCA

You must onboard any tenant or instance TOSCA definitions separately from the rest of your service definitions. In the build sequence above, the compute node is reprovisioned last, so any instances that you define would have no compute node to host them at the time the TOSCA is compiled. We therefore recommend defining any TOSCA tenants or instances in cord/build/platform-install/roles/test-mcord-tenants-config/templates/onboard-mcord-tenants.yaml.j2. To onboard this file, define and run the Ansible playbook alias below from the prod VM.

$ alias deploy-tenants="pushd /opt/cord/build/platform-install; ansible-playbook -i inventory/head-localhost --extra-vars @/opt/cord/build/genconfig/config.yml mcord-tenant-playbook.yml; popd"

$ deploy-tenants

Choice 2: Deploy Locally on a VM

If you are doing work that does not involve OpenStack, you can create a development environment inside a VM on your local machine. This environment is mostly designed for GUI, API, and modeling work. It can also be useful for testing a synchronizer whose job is synchronizing data using REST APIs.

Note: This quick start guide has only been tested against Vagrant (v1.9.5) and VirtualBox (v5.1.22), specifically on macOS.

The following dependencies/prerequisites are required to implement this approach:

- Vagrant

- VirtualBox

- repo

Installing the Dependencies

The latest version of each tool can be found on its support page; however, Homebrew can install them for us:

$ brew cask install vagrant

$ brew cask install virtualbox

$ brew install repo

If you do not have Homebrew available, please download Vagrant and VirtualBox directly. Since CORD consists of 40+ repositories and changes can easily span multiple repositories, we use the repo tool to help us manage this complexity. Below are instructions for fetching repo itself:

$ cd /usr/bin

$ sudo wget https://storage.googleapis.com/git-repo-downloads/repo

$ sudo chmod a+x /usr/bin/repo
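Before moving on, it can help to confirm that all three prerequisites are actually on your PATH. The following is a minimal sketch; the function name `missing_commands` is hypothetical, and it assumes VirtualBox is detected through its `VBoxManage` command-line tool.

```shell
# Hypothetical helper: print each command from the argument list that
# cannot be found on PATH. Prints nothing when everything is installed.
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}
```

Running `missing_commands vagrant VBoxManage repo` prints the names of any tools that still need to be installed.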

Setting Up the Environment

Create a dedicated directory for the CORD source code and use repo to pull the source code from Gerrit:

$ mkdir cord && cd cord

$ repo init -u https://gerrit.opencord.org/manifest -b cord-3.0

$ repo sync

Start & Connect to the Head-Node VM

We provide a Vagrant VM that hosts a container running the XOS orchestrator. The CORD-in-a-Box implementation can also start containers for ONOS, OpenStack, and MAAS (Metal-as-a-Service); however, a CiaB installation can take upwards of 2 hours to complete. This guide is meant for GUI, service profile, and API development, and avoids the unnecessary overhead of the other components. The Vagrantfile for this VM is located in the build/platform-install directory. Below is the output of 'vagrant status' before the VM has been created:

$ cd build/platform-install

$ vagrant status

Current machine states:

head-node not created (virtualbox)

The environment has not yet been created. Run `vagrant up` to create the environment. If a machine is not created, only the default provider will be shown. So if a provider is not listed, then the machine is not created for that environment.

Once the VM is up and provisioned, vagrant status will look like:

$ vagrant up

$ vagrant status

Current machine states:

head-node running (virtualbox)

The VM is running. To stop this VM, you can run `vagrant halt` to shut it down forcefully, or you can run `vagrant suspend` to simply suspend the virtual machine. In either case, to restart it again, simply run `vagrant up`

Now we can connect to the VM:
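If you want to check the VM state from a script rather than by reading the human-oriented text, Vagrant can emit comma-separated output with the `--machine-readable` flag. The sketch below assumes the usual `timestamp,target,type,data` layout of that output and picks out the `state` record for one machine; the function name `vagrant_state` is hypothetical.

```shell
# Hypothetical helper: read `vagrant status --machine-readable` output on
# stdin and print the state of the named machine (e.g. "running").
# Assumes lines of the form: timestamp,target,type,data
vagrant_state() {
    awk -F, -v name="$1" '$2 == name && $3 == "state" { print $4 }'
}
```

A typical invocation would be `vagrant status --machine-readable | vagrant_state head-node`, run from the build/platform-install directory.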

$ vagrant ssh head-node

Welcome to Ubuntu 14.04.5 LTS (GNU/Linux 3.13.0-119-generic x86_64)

* Documentation: https://help.ubuntu.com/

System information as of Thu Jun 8 22:06:16 UTC 2017

System load: 0.0

Usage of /: 22.6% of 39.34GB

Memory usage: 18%

Swap usage: 0%

Processes: 126

Users logged in: 0

IP address for eth0: 10.0.2.15

IP address for eth1: 192.168.46.100

IP address for docker0: 172.17.0.1

IP address for br-f125dd900ed3: 172.18.0.1

Graph this data and manage this system at:

https://landscape.canonical.com/

Get cloud support with Ubuntu Advantage Cloud Guest:

http://www.ubuntu.com/business/services/cloud

0 packages can be updated.

0 updates are security updates.

New release '16.04.2 LTS' available.

Run 'do-release-upgrade' to upgrade to it.

Last login: Thu Jun 8 22:06:18 2017 from 10.0.2.2

vagrant@vagrant-ubuntu-trusty-64:~$

Start the XOS Container w/ M-CORD Profile

Most profiles are run by specifying an inventory file when running ansible-playbook. For most frontend or mock profiles, you'll want to run the deploy-xos-playbook.yml playbook. To run the M-CORD profile, run:

$ cd cord/build/platform-install

$ ansible-playbook -i inventory/mock-mcord deploy-xos-playbook.yml

More information on deploying other CORD profiles can be found here: https://github.com/opencord/platform-install#development-loop.

Not in `docker` Usergroup

Your user may not be a member of the `docker` user group when you first connect to the head-node VM. This can cause a permissions error regarding the Dockerfile inside the head-node. For the group membership to take effect, simply log out and log back in to the VM. You can verify your membership by running the `groups` command.
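The membership check can also be scripted. The following is a minimal sketch built on `id -nG`, which lists the current user's group names; the function name `user_in_group` is hypothetical, and the loop assumes group names without spaces, as on the Ubuntu head-node.

```shell
# Hypothetical helper: succeed (exit status 0) if the current user is a
# member of the named group, fail (exit status 1) otherwise.
user_in_group() {
    for group in $(id -nG); do
        [ "$group" = "$1" ] && return 0
    done
    return 1
}
```

For example, `user_in_group docker || echo "log out and back in first"` prints the reminder only when the membership is not yet active in the current session.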

Connecting to the GUI

Deploying the M-CORD profile for the first time will take close to 20 minutes in order to set up the development environment. Once complete, you can connect to the XOS GUI running in the head-node. In the Vagrantfile, note that the VM's IP address is 192.168.46.100. You can also confirm this using 'ifconfig' in the VM.

$ ifconfig

eth1 Link encap:Ethernet HWaddr 08:00:27:82:75:9a

inet addr:192.168.46.100 Bcast:192.168.46.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:fe82:759a/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:4873 errors:0 dropped:0 overruns:0 frame:0

TX packets:4480 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:597993 (597.9 KB) TX bytes:4211482 (4.2 MB)

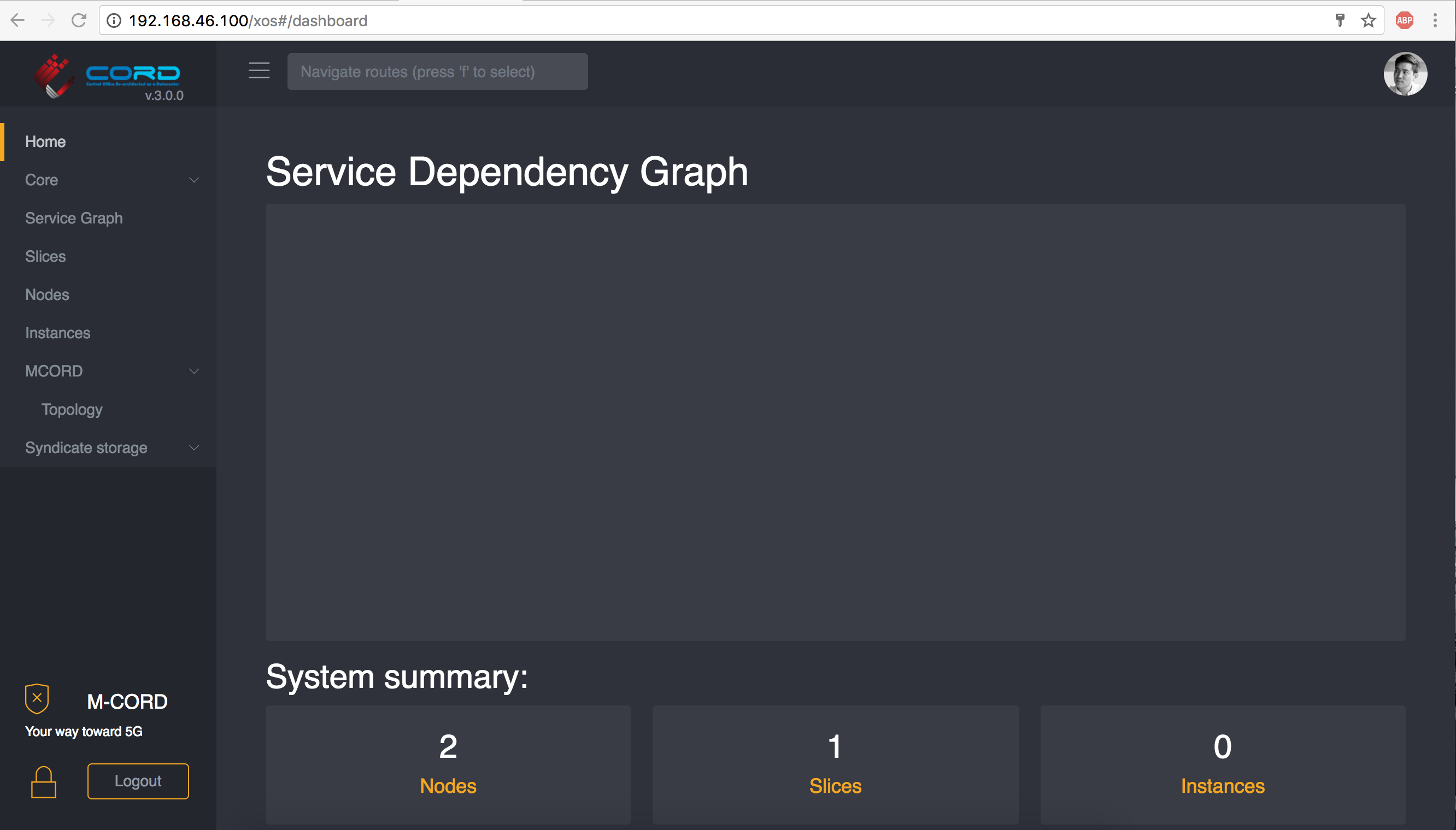

Your host machine and the VM are connected using a VirtualBox network interface created upon the launch of the VM. This means that you can connect to the XOS GUI by pointing your host machine's browser to 192.168.46.100/xos/. The username is xosadmin@opencord.org, and the password is auto generated in the /cord/build/platform-install/credentials/xosadmin@opencord.org file. The main page for the XOS GUI will look like this after log-in.

As you can see, the M-CORD profile has been launched. Also, note that the service dependency graph is empty. This is because, as explained earlier, this is meant to be a bare installation of M-CORD. Should you want to proceed to a full CiaB run-through, see Choice 1 above or follow this guide: https://github.com/opencord/cord/blob/cord-3.0/docs/quickstart.md.
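The auto-generated password for xosadmin@opencord.org can be read from inside the head-node VM. The sketch below wraps that lookup; the function name `xos_password` and its optional directory argument are hypothetical, while the default path and the file name are the ones given in this guide.

```shell
# Hypothetical helper: print the auto-generated XOS admin password.
# The optional argument overrides the credentials directory; the default
# is the path named in this guide (valid inside the head-node VM).
xos_password() {
    cred_dir="${1:-/cord/build/platform-install/credentials}"
    cat "$cred_dir/xosadmin@opencord.org"
}
```

Run `xos_password` inside the VM and paste the result into the GUI login form on your host machine.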

When you are done using the VM, tear down the profile, exit the VM, and shut it down.

$ ansible-playbook -i inventory/mock-mcord teardown-playbook.yml

$ exit

logout

Connection to 192.168.46.100 closed.

$ vagrant halt